I really wanted to use some real data within my project. Initially I looked into using SoundCloud to play music, but Unity doesn’t support streaming MP4 files, which is (apparently) what SoundCloud's files are. Spotify was also a no-go as it didn’t have a suitable API, which was surprising. So then I looked at Facebook’s API, which was made for Unity (games). In terms of the flow for their API, when you are in the Unity Editor it shows a different (mockup) GUI, which responds differently from the one you get when you build the game and upload it to Facebook’s server. The issue with this is that I couldn’t upload my project to Facebook’s server, so this part of my previsualisation only works within the Unity Editor. It switches off when you’re outside of the editor and you just go straight to the Facebook story panel.

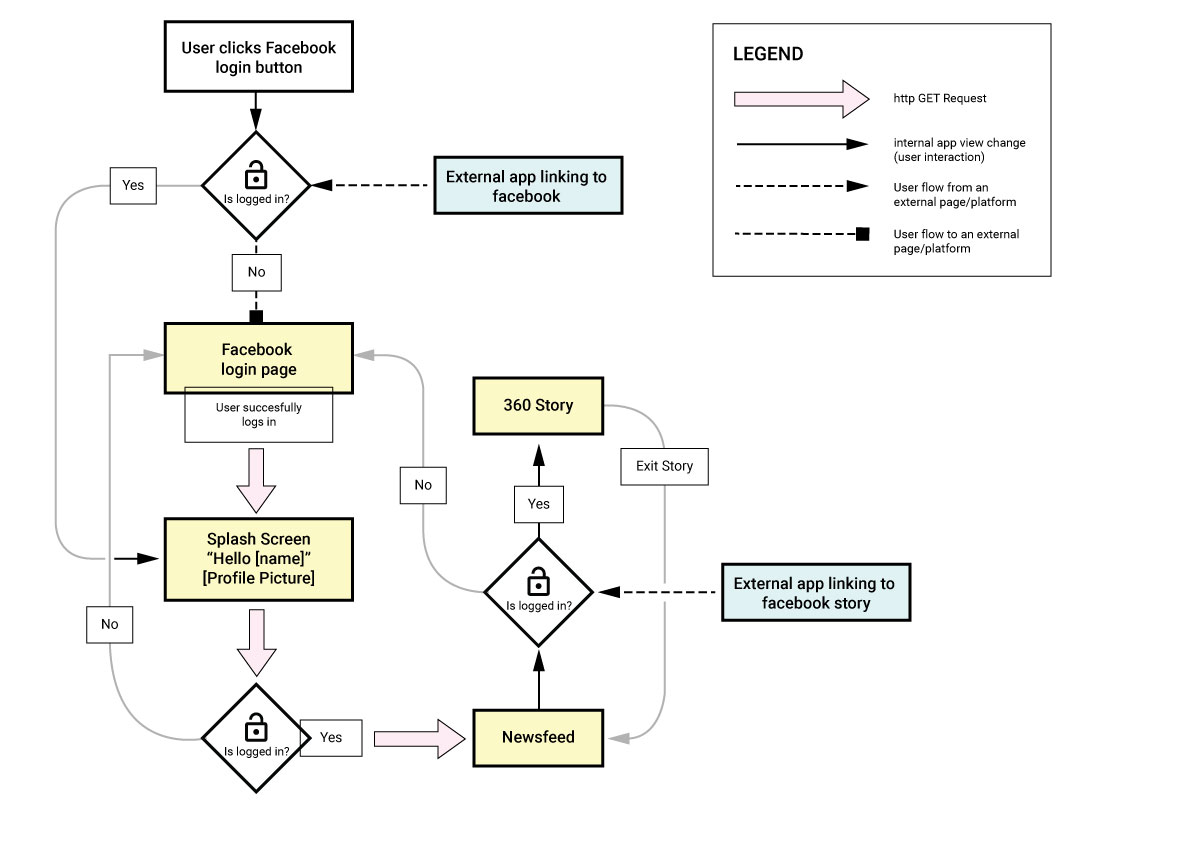

Below is the user flow of the Facebook panel. I used this flowchart to guide my scripting for the ‘Facebook API Manager’ script.

Eye Scanning

At the moment there is a lot of attention and hype around eye tracking within HMDs. With this in mind, I think it is feasible that in the near future eye scanning within an HMD will be both possible and necessary. As a point of reference, I think the user experience would be similar to fingerprint scanning on mobile phones (as a form of identification). Eye scanning would fix the current UX problem where the user has to log in to Facebook and give permission via their browser. The future is here.