Virtual Reality Hand Interface Design

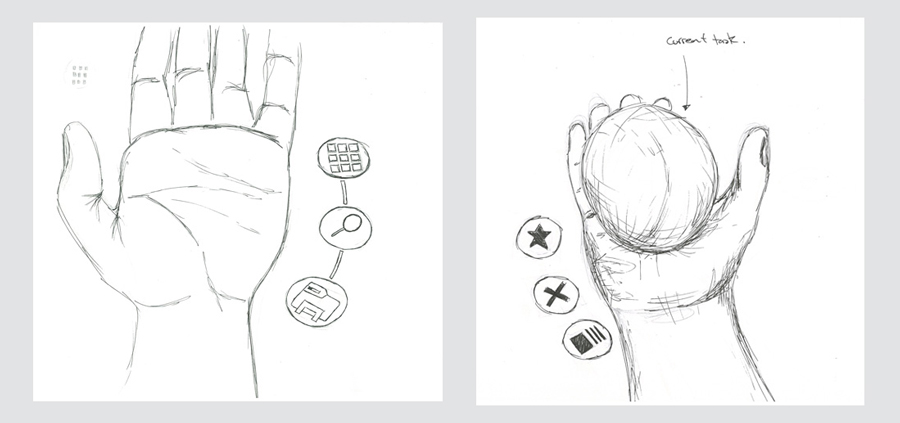

I was thinking about how the user would be able to see and use ‘event relevant actions’. For example, when a user has opened a video they should be able to:

- close the video

- move to the next video

- delete the video, etc.

I was thinking about how this would be used, and where this menu would be placed within the environment. It would need to be hidden when it isn’t needed by the user, which would make it difficult to position it where the main navigation is placed. That would also be an unexpected placement for an event-specific UI.

I looked into this idea of having the UI as an extension of the hand, inspired by Leap Motion’s innovative designs. In terms of real-life ‘hand UIs’, this reminded me of thumb painting trays, which use a similar concept for a similar purpose. The tray itself is bound to the user’s thumb, and they select paint using a paint brush held in their opposite hand. The palettes of the paint tray are positioned around the hand. In the second image, I highlight the points of interest. I was particularly interested in the positioning of the palettes, and later on I tested these positions using buttons.

In terms of the UX of a thumb painting tray, an issue that I always had while using one was that the hand holding the tray had to be held at a specific angle for the tray to be usable. This is something I will try to solve while recreating this idea in VR.

I really liked this idea of having a hand user interface whose actions are relevant to the mode/app that the user is in. Once the user is accustomed to this, they will be able to find action-relevant options quickly without having to search the environment, as they always know the location of their hands (which will be motion tracked).
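As a rough illustration of actions being relevant to the current mode/app, the sketch below maps a mode name to the list of actions the hand UI would show. The mode names, the action labels beyond the video example above, and the `actions_for` helper are all hypothetical and only there to show the shape of the idea.

```python
# Hypothetical lookup of hand-UI actions per mode/app.
# "video" mirrors the example in this post; "gallery" is an invented mode.
ACTIONS_BY_MODE = {
    "video": ["close video", "next video", "delete video"],
    "gallery": ["open item", "delete item"],
}


def actions_for(mode):
    """Return the actions to show on the hand UI for the active mode.

    Unknown modes return an empty list, so the hand UI simply stays empty.
    """
    return list(ACTIONS_BY_MODE.get(mode, []))
```

When the user switches mode, the hand UI would only need to ask for the new list and rebuild its buttons in the same positions around the hand.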

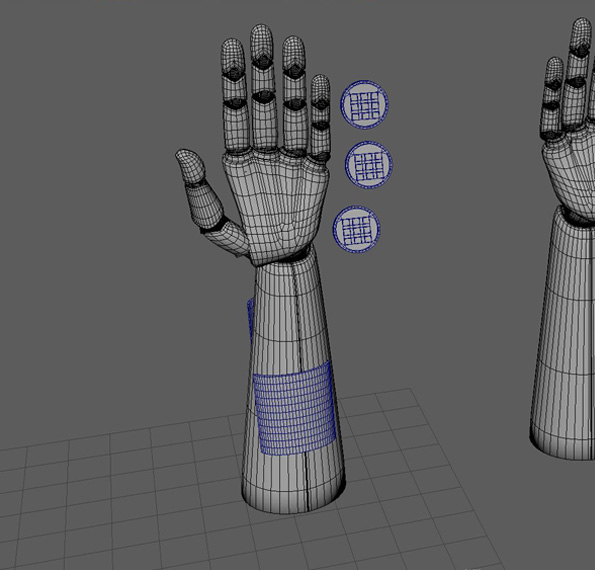

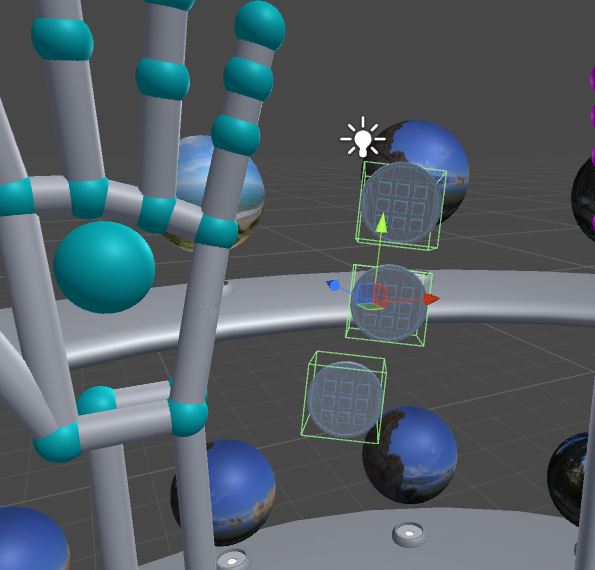

I created a demo scene in Maya using a hand template provided by Leap Motion to further explore UI positioning. I then exported this into Unity and tested different button positions to get a better feel for these buttons. I tested different colours, sizes and positions relative to the hands.

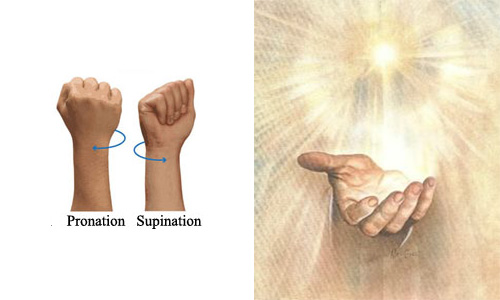

In terms of opening the UI, I wanted to create a unique experience which made the user feel in control of the event/app. For this, I looked at the position the hand is in when the user wants to use the hand UI (palm upwards). The UI therefore does not need to be seen unless the palm is facing upwards, and turning the palm upwards is what opens it. This reminded me of a ‘hand of god’, perhaps because the user has ‘power’ via body movement.
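One way the palm-upwards check could be sketched is to compare the direction the palm is facing with the world's up direction. This is only an illustration under assumptions: that the tracking data provides a vector pointing out of the palm, that the world is y-up, and that a tolerance of 40 degrees feels right. The function name and the threshold are hypothetical, not values from my project.

```python
import math


def is_palm_up(palm_normal, max_angle_deg=40.0, up=(0.0, 1.0, 0.0)):
    """Return True when the palm faces upwards within max_angle_deg.

    palm_normal: (x, y, z) vector pointing out of the palm (assumed input).
    up: unit vector for the world's up direction (y-up assumed).
    """
    nx, ny, nz = palm_normal
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0.0:
        # No usable tracking data, so keep the UI hidden.
        return False
    # Cosine of the angle between the palm normal and the up direction.
    cos_angle = (nx * up[0] + ny * up[1] + nz * up[2]) / length
    return cos_angle >= math.cos(math.radians(max_angle_deg))
```

The hand UI would then be shown while `is_palm_up(...)` is true and hidden otherwise. A wider tolerance makes the UI easier to open but also easier to open by accident.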

I also looked into the parenting of the UI to the hand. Initially, I had the UI parented on all axes in terms of its position and rotation. This was quite fidgety, as the rotation jitters quite substantially because the hands are being tracked via markerless tracking. To make the experience more stable, I looked at removing z-axis tracking (see image to right for axis example) and fixing the z-axis. This also removes the issue described earlier which exists in real-life thumb painting trays.
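The idea of following the hand while fixing the z-axis could be sketched roughly as below. I am reading "fixing the z-axis" as holding the rotation about z at a constant value while position and the other two rotations still follow the hand. The Euler-angle representation, the `ui_pose` name and the default of 0 degrees are assumptions for illustration rather than what the Unity scene actually does.

```python
def ui_pose(hand_position, hand_euler_deg, fixed_z_deg=0.0):
    """Return (position, rotation) for the hand UI.

    hand_position: (x, y, z) of the tracked hand.
    hand_euler_deg: (x, y, z) rotation of the tracked hand in degrees.
    fixed_z_deg: constant used in place of the tracked z rotation.
    """
    x_rot, y_rot, _tracked_z = hand_euler_deg  # tracked z rotation is ignored
    position = tuple(hand_position)            # position still follows the hand
    rotation = (x_rot, y_rot, fixed_z_deg)
    return position, rotation
```

For example, `ui_pose((1, 2, 3), (10, 20, 35))` keeps the position and the x and y rotations but swaps the tracked z rotation of 35 for the fixed value, so jitter on that axis never reaches the UI.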